PoLaRIS-Dataset

Why PoLaRIS-Dataset?

Unmanned Surface Vehicles (USVs) require precise object recognition for safe navigation, but they must cope with challenges such as irregular lighting and unpredictable obstacles. Existing maritime datasets have two major limitations in this respect:

- Sensor Reliability: Poor performance under challenging lighting, with limited multi-sensor integration.

- Dynamic Object Tracking: Insufficient tracking annotations for long-range and small objects, even though tracking them is critical for USV collision avoidance.

To address these issues, PoLaRIS-Dataset introduces the following key contributions:

- Multi-Scale Object Annotation: Annotations for both large and small objects, initially generated by an object detector and then manually refined.

- Dynamic Object Tracking: Comprehensive tracking annotations to enhance navigation performance.

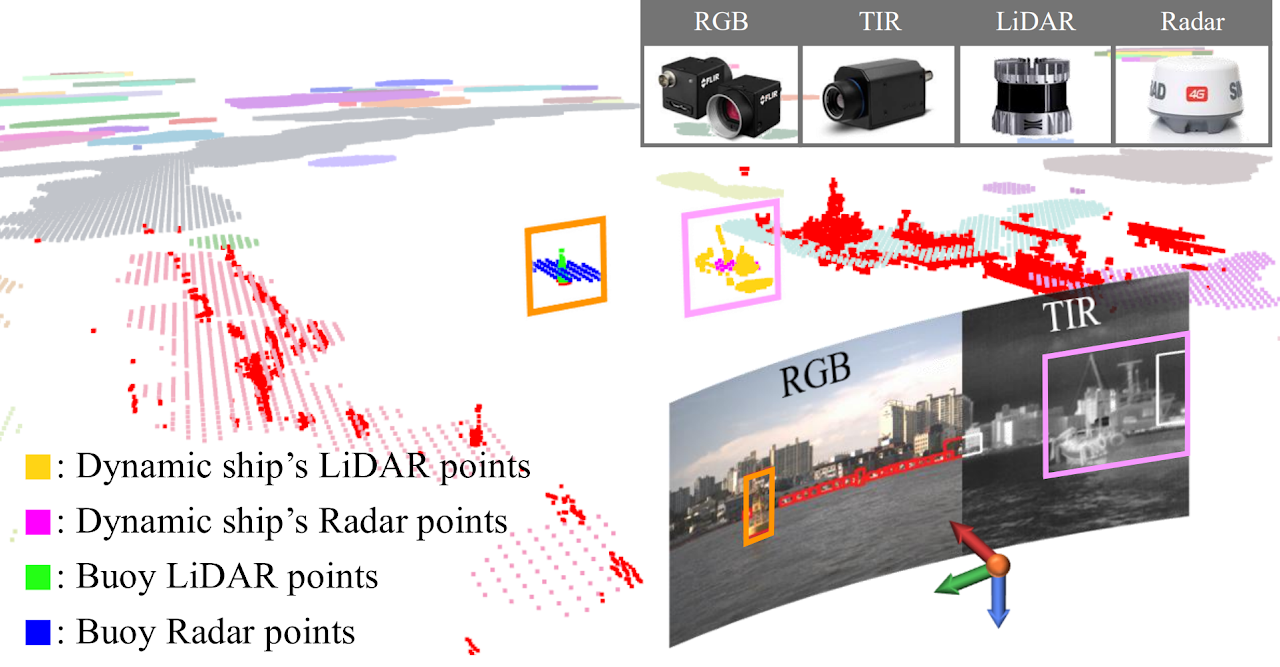

- Multi-Modal Annotations: Stereo RGB, Thermal Infrared (TIR), LiDAR, and Radar data annotated using a semi-automatic, human-verified process.

- Benchmark Validation: Evaluations with conventional and state-of-the-art (SOTA) methods demonstrate the dataset's effectiveness in maritime environments.

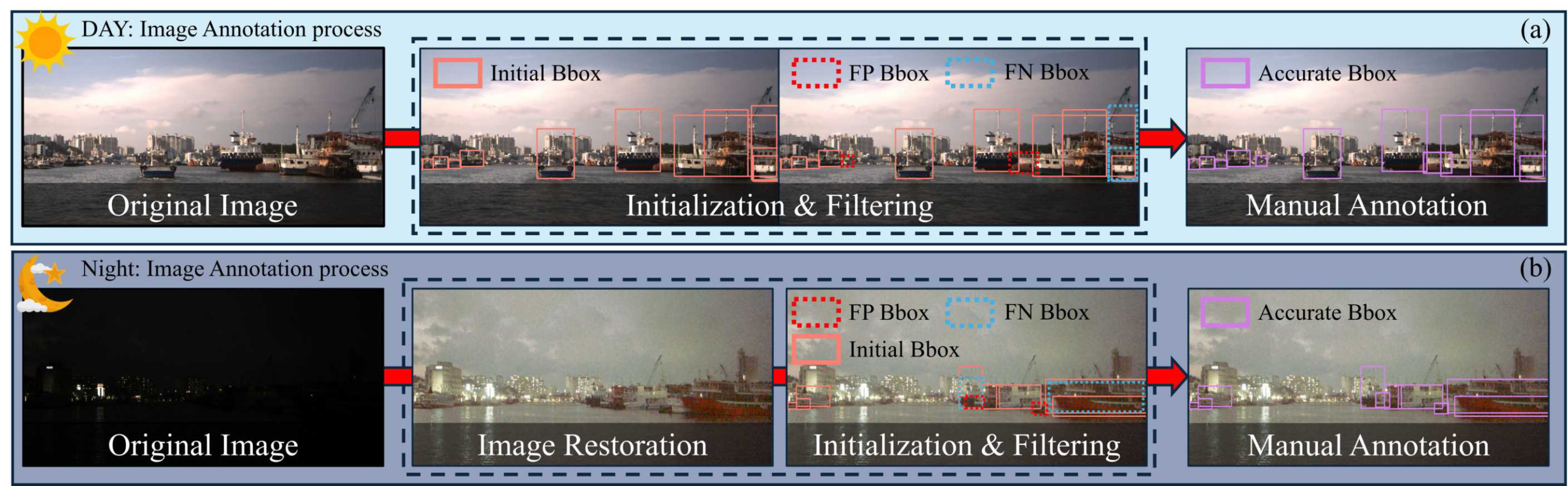

Image Annotation Process

Stereo Left Camera (RGB)

Daytime

- One image out of every 20 is selected and manually annotated to create a training set.

- Remaining images are initialized using YOLOv8 detections, with overlapping boxes (IoU ≥ 0.8) removed.

- All small objects (those occupying less than 5% of the image area) are annotated manually.
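The daytime steps can be illustrated with a minimal sketch. It assumes boxes are `(x1, y1, x2, y2)` pixel tuples, that sampling starts at frame 0, that "5% of the image size" means box area relative to image area, and that when two detections overlap with IoU ≥ 0.8 the higher-confidence one is kept. All of these choices, and every function name below, are hypothetical illustrations rather than the dataset's actual tooling.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # hypothetical format: (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def sample_training_frames(num_frames: int, stride: int = 20) -> List[int]:
    """Indices of frames picked for manual annotation (one in every `stride`, starting at 0)."""
    return list(range(0, num_frames, stride))


def remove_overlaps(boxes: List[Box], scores: List[float], thr: float = 0.8) -> List[Box]:
    """Greedy removal: for any pair with IoU >= thr, keep only the higher-scoring box (assumption)."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[Box] = []
    for i in order:
        if all(iou(boxes[i], k) < thr for k in kept):
            kept.append(boxes[i])
    return kept


def is_small(box: Box, img_w: int, img_h: int, ratio: float = 0.05) -> bool:
    """True if the box covers less than `ratio` of the image area (flag for manual annotation)."""
    return (box[2] - box[0]) * (box[3] - box[1]) < ratio * img_w * img_h
```

For example, two YOLOv8 boxes `(100, 100, 200, 200)` and `(105, 102, 203, 201)` overlap with IoU ≈ 0.90, so only the higher-scoring one survives `remove_overlaps`.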

Nighttime

- Low-light images are enhanced using a diffusion-based GSAD method instead of relying on noisy TIR images.

- Manual annotations are performed on the enhanced images.

Stereo Right Camera (RGB)

- Initial bounding boxes are transferred from the left image annotations.

- Manual corrections are applied to account for differences in field of view (FOV).

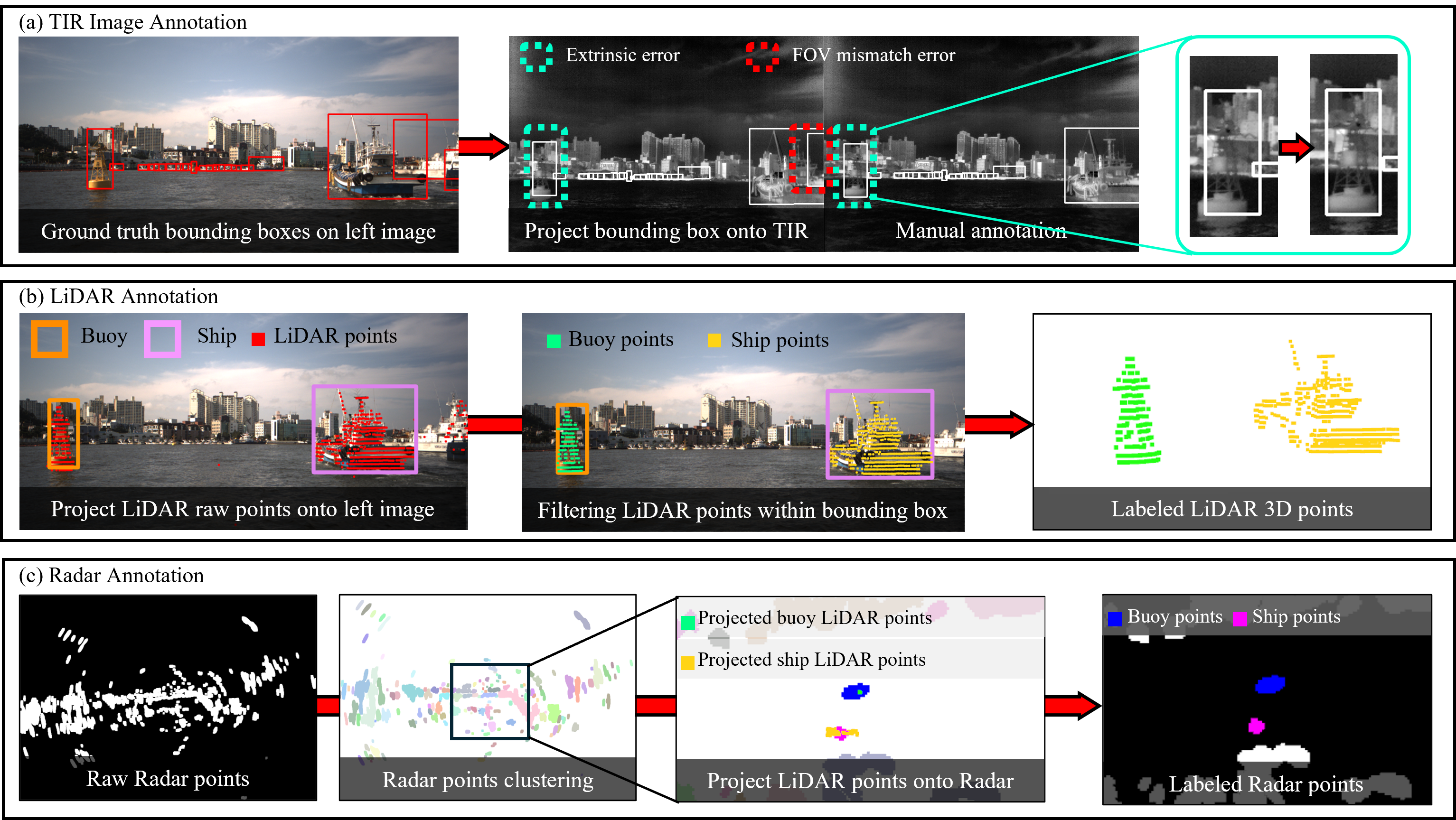

Semi-Automatic Annotation

TIR Images

- Annotations are transferred from the left camera using extrinsic parameters.

- Transformation formula:

- Manual corrections are required to address FOV differences and transformation errors.

- 16-bit TIR images are converted to 8-bit using Fieldscale for better visualization before manual refinement.
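One common way to move a box between two cameras with known extrinsics is the infinite homography H = K_T · R · K_L⁻¹, where K_L and K_T are the left-camera and TIR intrinsics and R rotates the left-camera frame into the TIR frame. This is an assumption for illustration, not necessarily the transformation formula used for PoLaRIS. It ignores the translation between the sensors, which is reasonable for distant objects but introduces error for near ones. The sketch maps the four box corners, takes their axis-aligned bounding box, and clips it to the TIR image; the function names are hypothetical.

```python
import numpy as np


def infinite_homography(K_src: np.ndarray, K_dst: np.ndarray, R_dst_src: np.ndarray) -> np.ndarray:
    """H = K_dst @ R @ K_src^-1: exact for points at infinity, approximate for far objects."""
    return K_dst @ R_dst_src @ np.linalg.inv(K_src)


def transfer_box(box, H: np.ndarray, dst_w: int, dst_h: int):
    """Map an (x1, y1, x2, y2) box through H; return None if it leaves the destination image."""
    x1, y1, x2, y2 = box
    # Homogeneous corners as a 3x4 matrix (one column per corner).
    corners = np.array([[x1, y1, 1], [x2, y1, 1], [x2, y2, 1], [x1, y2, 1]], dtype=float).T
    mapped = H @ corners
    mapped = mapped[:2] / mapped[2]  # back to pixel coordinates
    nx1, ny1 = mapped.min(axis=1)
    nx2, ny2 = mapped.max(axis=1)
    # Clip to the destination image to account for the narrower or shifted FOV.
    nx1, nx2 = np.clip([nx1, nx2], 0, dst_w)
    ny1, ny2 = np.clip([ny1, ny2], 0, dst_h)
    if nx2 <= nx1 or ny2 <= ny1:
        return None  # box falls outside the destination field of view
    return (float(nx1), float(ny1), float(nx2), float(ny2))
```

Boxes that come back as `None` or land on the image border are natural candidates for the manual corrections described above.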

LiDAR Points

- LiDAR points are projected onto the left image based on extrinsic calibration.

- Points outside the bounding boxes are filtered out.

- Manual verification ensures accurate object shape representation.
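The projection and filtering steps can be sketched with a pinhole model, assuming the extrinsic calibration is given as a rotation `R` and translation `t` taking LiDAR coordinates into the left-camera frame (z pointing forward) and `K` is the left-camera intrinsic matrix. The function names and the `min_depth` cutoff are hypothetical. Points behind the camera are discarded explicitly, since their projections can otherwise land inside a box.

```python
import numpy as np


def project_lidar(points: np.ndarray, K: np.ndarray, R: np.ndarray, t: np.ndarray):
    """Project Nx3 LiDAR points into the image; return Nx2 pixel coords and camera-frame depth."""
    cam = points @ R.T + t  # LiDAR frame -> camera frame
    depth = cam[:, 2]
    uvw = cam @ K.T  # apply intrinsics
    with np.errstate(divide="ignore", invalid="ignore"):
        uv = uvw[:, :2] / uvw[:, 2:3]  # perspective division
    return uv, depth


def points_in_box(points, box, K, R, t, min_depth: float = 0.1) -> np.ndarray:
    """Boolean mask of points in front of the camera whose projection falls inside the box."""
    uv, depth = project_lidar(points, K, R, t)
    x1, y1, x2, y2 = box
    inside = (uv[:, 0] >= x1) & (uv[:, 0] <= x2) & (uv[:, 1] >= y1) & (uv[:, 1] <= y2)
    return (depth > min_depth) & inside
```

Points selected by the mask would inherit that box's label; points outside every box are dropped before manual verification.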

Radar Points

- Radar annotations are generated through fusion with LiDAR data.

- The annotation process includes:

  - Converting LiDAR-labeled points into Radar BEV coordinates.

  - Clustering Radar points using the DBSCAN algorithm.

  - Assigning annotations by matching Radar clusters with labeled LiDAR points.

- This approach accounts for the characteristics of Radar data (e.g., sparse, noisy returns) and improves annotation accuracy.
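The three Radar steps can be sketched as follows, assuming a known LiDAR-to-Radar extrinsic (`R`, `t`), BEV meaning the (x, y) ground-plane coordinates, and a majority vote over labeled LiDAR points within `match_radius` of any cluster member. The `eps`, `min_samples`, and `match_radius` values, the voting rule, and all function names are hypothetical. A small O(N²) DBSCAN is written out to keep the example self-contained; in practice scikit-learn's `sklearn.cluster.DBSCAN` is the usual choice.

```python
from collections import Counter

import numpy as np


def lidar_to_radar_bev(points: np.ndarray, R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Transform Nx3 LiDAR points into the Radar frame and keep (x, y) for BEV."""
    return (points @ R.T + t)[:, :2]


def dbscan(xy: np.ndarray, eps: float, min_samples: int) -> np.ndarray:
    """Minimal DBSCAN; returns a cluster id per point and -1 for noise."""
    n = len(xy)
    dists = np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=-1)
    neighbors = [np.flatnonzero(dists[i] <= eps) for i in range(n)]  # includes the point itself
    labels = np.full(n, -1)
    visited = np.zeros(n, dtype=bool)
    cluster = 0
    for i in range(n):
        if visited[i]:
            continue
        visited[i] = True
        if len(neighbors[i]) < min_samples:
            continue  # noise for now; may later join a cluster as a border point
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # unvisited or former noise point joins this cluster
            if visited[j]:
                continue
            visited[j] = True
            if len(neighbors[j]) >= min_samples:
                queue.extend(neighbors[j])  # core point: keep expanding
        cluster += 1
    return labels


def assign_radar_labels(radar_xy, lidar_xy, lidar_labels, eps=1.0, min_samples=2, match_radius=1.0):
    """Give each Radar cluster the majority label of nearby labeled LiDAR points (-1 if none)."""
    clusters = dbscan(radar_xy, eps, min_samples)
    out = np.full(len(radar_xy), -1)
    for c in set(clusters.tolist()) - {-1}:
        members = radar_xy[clusters == c]
        d = np.linalg.norm(members[:, None, :] - lidar_xy[None, :, :], axis=-1)
        near = lidar_labels[(d <= match_radius).any(axis=0)]
        near = near[near >= 0]  # ignore unlabeled LiDAR points
        if len(near):
            out[clusters == c] = Counter(near.tolist()).most_common(1)[0][0]
    return out
```

Clustering first means a whole Radar object is labeled at once, so isolated noisy returns (DBSCAN noise) stay unlabeled instead of being matched point by point.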